𝚃𝚎𝚡𝚃𝚎𝚕𝚕𝚎𝚛

[Project page](https://oleehyo.github.io/TexTeller/) | [arXiv](https://arxiv.org/abs/2508.09220) | [Dataset](https://huggingface.co/datasets/OleehyO/latex-formulas-80M) | [Model weights](https://huggingface.co/OleehyO/TexTeller) | [Docker](https://hub.docker.com/r/oleehyo/texteller) | [License: Apache-2.0](https://opensource.org/licenses/Apache-2.0)

https://github.com/OleehyO/TexTeller/assets/56267907/532d1471-a72e-4960-9677-ec6c19db289f

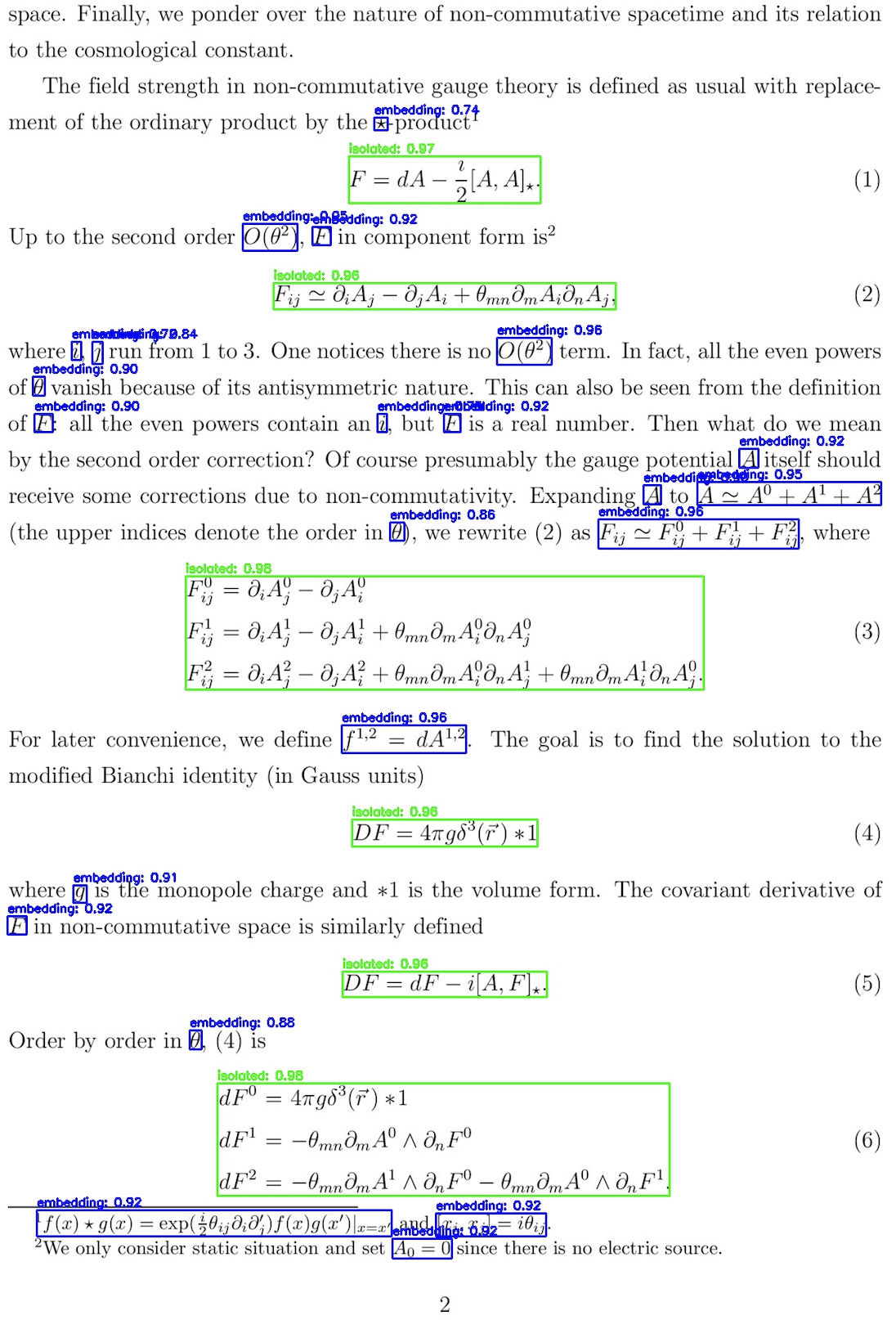

TexTeller is an end-to-end formula recognition model that converts images of formulas into the corresponding LaTeX code.

TexTeller was trained on **80M image-formula pairs** (the previous dataset is available [here](https://huggingface.co/datasets/OleehyO/latex-formulas)). Compared to [LaTeX-OCR](https://github.com/lukas-blecher/LaTeX-OCR), which was trained on a 100K dataset, TexTeller offers **stronger generalization** and **higher accuracy**, covering most use cases.

> [!NOTE]
> If you would like to provide feedback or suggestions for this project, feel free to start a discussion in the [Discussions section](https://github.com/OleehyO/TexTeller/discussions).

---